After you’ve installed OpenShift with the Agent-Based Installer in an airgapped environment, here’s what you generally need to do:

- Validating the cluster health

- Connecting it to your internal/offline registries

- Configuring Operators and CatalogSources for offline mode

- Setting up authentication, storage, monitoring, and backups

Here’s a post-install checklist tailored for airgapped ABA deployments:

1. Verify Cluster Health (use Web UI as much as possible)

#Check all cluster operators (see them on Web UI)

oc get co
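If you'd rather script this check than eyeball the table, here's a minimal sketch. It assumes the default column order of `oc get co --no-headers` (NAME, VERSION, AVAILABLE, PROGRESSING, DEGRADED) and that every operator already reports a version; the function name `unhealthy_operators` is made up for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `oc get co --no-headers` output on stdin and
# prints the operators that are not Available or that are Degraded.
# Assumes columns: NAME VERSION AVAILABLE PROGRESSING DEGRADED ...
unhealthy_operators() {
  awk '$3 != "True" || $5 != "False" { print $1 }'
}

# Usage (needs a logged-in oc session):
#   oc get co --no-headers | unhealthy_operators
```

An empty result means every operator reported Available=True and Degraded=False at that moment.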

#Ensure all nodes are Ready (see them on Web UI)

oc get nodes

#Review events for any degraded components (see them on Web UI)

oc get events -A --sort-by=.lastTimestamp

2. Configure Internal Image Registry (Optional)

If you have an external image registry (e.g., Quay), skip this step. Most airgapped installations already have a container registry sitting outside of OpenShift. If you do plan to use the OpenShift internal registry, make it available and back it with persistent storage:

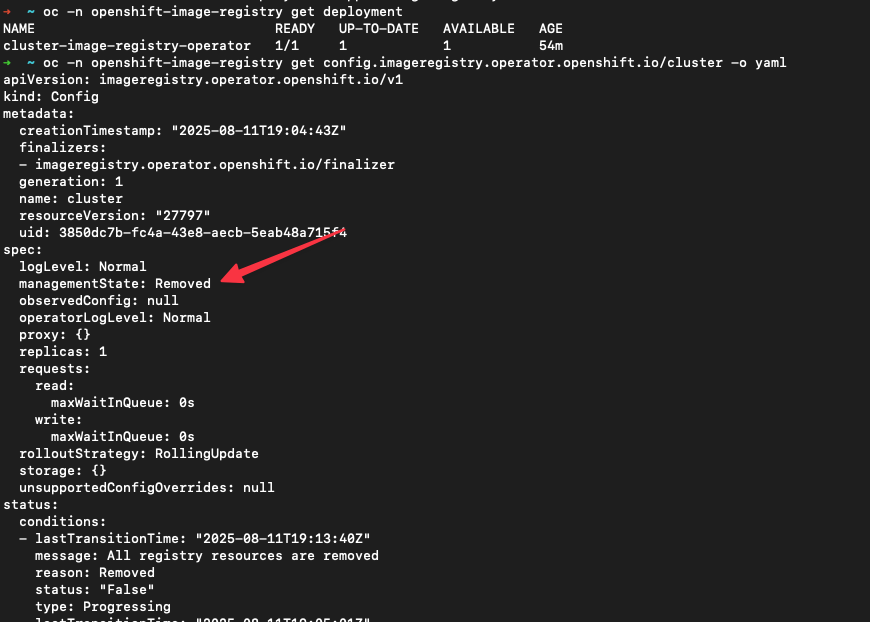

oc patch configs.imageregistry.operator.openshift.io/cluster --type merge \
  -p '{"spec":{"managementState":"Managed","storage":{"pvc":{"claim":"<pvc-name>"}}}}'

If you still want an internal image registry, it is installed by default as a cluster operator in the “openshift-image-registry” namespace. After a fresh install on platforms without shareable default storage (bare-metal, agent-based, or disconnected setups), the Image Registry Operator bootstraps itself in the Removed management state so that the installation can complete, and the registry is not deployed until you configure storage and switch it to Managed. See the image above.

Here’s how to enable the registry and configure storage. Pick an available RWX-capable storage backend (e.g., ODF, NFS, or a PVC from your CSI driver).

# 1. Using a PVC
# make sure the registry-storage PVC exists first
oc patch configs.imageregistry.operator.openshift.io/cluster --type merge -p '{
  "spec": {
    "managementState": "Managed",
    "storage": {
      "pvc": {
        "claim": "registry-storage"
      }
    }
  }
}'
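The claim referenced by the PVC option has to exist in the openshift-image-registry namespace before the patch takes effect. Here's a rough sketch of a helper that prints such a claim. The function name, the 100Gi default, and the StorageClass argument are placeholders of mine, so adjust them to whatever RWX-capable class your cluster offers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints a PVC manifest for the registry to stdout.
# Arguments (all placeholders): claim name, size, StorageClass name.
registry_pvc_manifest() {
  local name="${1:-registry-storage}"
  local size="${2:-100Gi}"
  local sc="${3:?storage class name required}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: openshift-image-registry
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
  storageClassName: ${sc}
EOF
}

# Usage (needs a logged-in oc session):
#   registry_pvc_manifest registry-storage 100Gi <rwx-storage-class> | oc apply -f -
```

Printing the manifest instead of applying it directly lets you review it (or commit it to Git) before it touches the cluster.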

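# OR

# 2. Using an NFS backend
# The registry operator config has no "nfs" storage type. Expose the export
# (here nfs.example.com:/exports/registry) as an RWX PersistentVolume, bind a
# PVC to it in openshift-image-registry, and reference that claim instead.
# <nfs-backed-pvc-name> is a placeholder for your claim name.
oc patch configs.imageregistry.operator.openshift.io/cluster --type merge -p '{
  "spec": {
    "managementState": "Managed",
    "storage": {
      "pvc": {
        "claim": "<nfs-backed-pvc-name>"
      }
    }
  }
}'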

# OR

# 3. Using ephemeral storage (short-lived) - not for production.
# Turn it on and use emptyDir
oc patch configs.imageregistry.operator.openshift.io/cluster --type merge -p '{
  "spec": {
    "managementState": "Managed",
    "storage": { "emptyDir": {} },
    "replicas": 1
  }
}'

# (Optional) Expose a public route so you can log in/push from outside the cluster
oc patch configs.imageregistry.operator.openshift.io/cluster --type merge -p '{
  "spec": { "defaultRoute": true }
}'

# Check it came up, and verify after configuration

oc get clusteroperator image-registry

oc -n openshift-image-registry get pods

oc -n openshift-image-registry get route/default-route

Note that ephemeral means volatile: any registry restart/eviction wipes all stored image layers. Fine for labs/short‑lived testing, not for prod.

3. Mirror OperatorHub (Offline CatalogSources)

First, disable the default online sources that the marketplace tries to reach. In my view this is not optional, because otherwise you’ll get noisy errors and slow operator resolution. In a truly air‑gapped/disconnected cluster, leaving the default OperatorHub sources (redhat‑operators, certified‑operators, community‑operators, redhat‑marketplace) enabled makes the cluster constantly try (and fail) to reach the internet. That clutters Insights/alerts, slows console browsing of Operators, and can confuse users.

#Disable the default online sources:

oc patch OperatorHub cluster --type json \
  -p='[{"op":"add","path":"/spec/disableAllDefaultSources","value":true}]'

Use oc-mirror --v2 to create an offline mirror of the required operators and push them into your external container registry; see my other blog post for more explicit details.

oc-mirror -c isc-additional-operators.yaml file://local/path --v2

Now you need a way to populate your OperatorHub with the Operators that you will be using. Operators are a great way to manage the certified, tested and/or validated applications that you’ll run on the cluster.

Create a CatalogSource pointing to your external container registry (as covered in my other blog post). Apply the following YAML.

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: redhat-operators-offline
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  image: registry.kubernetes.day/ocp419/redhat/redhat-operator-index:v4.19
  displayName: Red Hat Operators (Offline)
  publisher: Red Hat
  updateStrategy:
    registryPoll:
      interval: 43200m
---
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: community-operators-offline
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  image: registry.kubernetes.day/ocp419/redhat/community-operator-index:v4.19
  displayName: Red Hat Community Operators (Offline)
  publisher: Red Hat
  updateStrategy:
    registryPoll:
      interval: 10m
---
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: certified-operators-offline
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  image: registry.kubernetes.day/ocp419/redhat/certified-operator-index:v4.19
  displayName: Red Hat Certified Operators (Offline)
  publisher: Red Hat
  updateStrategy:
    registryPoll:
      interval: 43200m
---
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: marketplace-operators-offline
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  image: registry.kubernetes.day/ocp419/redhat/redhat-marketplace-index:v4.19
  displayName: Red Hat Marketplace Operators (Offline)
  publisher: Red Hat
  updateStrategy:
    registryPoll:
      interval: 43200m

Verify that the default sources are disabled and that your new CatalogSources have been created.

oc get operatorhub cluster -o yaml | grep -i disableAllDefaultSources

oc -n openshift-marketplace get catalogsource
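A CatalogSource that exists is not necessarily serving content, so I also like to look at its gRPC connection state. The sketch below assumes the state is reported in `.status.connectionState.lastObservedState` and that READY is the healthy value; `not_ready_catalogs` is a name I made up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads "name<TAB>state" lines on stdin and prints
# every catalog whose last observed connection state is not READY.
not_ready_catalogs() {
  awk -F '\t' '$2 != "READY" { print $1 " (" ($2 == "" ? "no state yet" : $2) ")" }'
}

# Usage (needs a logged-in oc session):
#   oc -n openshift-marketplace get catalogsource \
#     -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.connectionState.lastObservedState}{"\n"}{end}' \
#     | not_ready_catalogs
```

If a catalog stays out of READY, the catalog pod in openshift-marketplace is usually the first place to look (image pull errors, TLS trust, or a wrong index image path).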

4. Configure Internal Registry Pull Secrets

Skip this step if you didn’t do step #2. The resulting credentials are used to pull images from the cluster’s internal registry.

1) Create a ServiceAccount to pull from the internal registry

Use a “shared” project (or the project that hosts your images). Grant it image‑pull permissions where needed.

NS=registry-shared

oc new-project $NS 2>/dev/null || true

oc create sa registry-puller -n $NS

# Allow this SA to pull images cluster‑wide (optional/wide)

oc adm policy add-cluster-role-to-user system:image-puller "system:serviceaccount:${NS}:registry-puller"

# Or, minimally, allow it to pull from a specific project that holds images:

# oc policy add-role-to-user system:image-puller "system:serviceaccount:${NS}:registry-puller" -n <source-project>

2) Mint a long-lived token for that SA

# 1-year token; adjust as you like (e.g., 720h for 30 days)
# uses the $NS namespace set above
TOKEN=$(oc create token registry-puller -n $NS --duration=8760h)

3) Extract the current cluster pull-secret to a file

TMP=/tmp/.dockerconfigjson

oc get secret/pull-secret -n openshift-config -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d > $TMP

4) Merge the internal registry auth into that file

This adds an auths entry keyed by your registry host, using any username (we’ll use serviceaccount) and the SA token as the password.

# Host of the registry route exposed in step 2 (defaultRoute: true)
REGISTRY=$(oc -n openshift-image-registry get route default-route -o jsonpath='{.spec.host}')
USER=serviceaccount
AUTH=$(printf "%s:%s" "$USER" "$TOKEN" | base64 -w0)

# Add/overwrite the entry for your registry route
jq --arg reg "$REGISTRY" --arg auth "$AUTH" '
  .auths[$reg] = {auth: $auth}
' "$TMP" > ${TMP}.new && mv ${TMP}.new "$TMP"

If your nodes will pull via the in‑cluster service (image-registry.openshift-image-registry.svc:5000) as well, repeat the jq line with --arg reg image-registry.openshift-image-registry.svc:5000.
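Since a malformed auth value in the cluster pull-secret can break image pulls on every node, a quick round-trip check before patching seems worth it. This is only a sketch: the helper names are mine, and it pipes through `tr` instead of relying on `base64 -w0`, which is a GNU option.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for sanity-checking a dockerconfigjson "auth" value.

# make_auth <user> <token> -> base64("user:token") on a single line
make_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# auth_user <auth> -> the user part of the decoded "user:token" value
auth_user() {
  printf '%s' "$1" | base64 -d | cut -d: -f1
}

# Usage:
#   AUTH=$(make_auth serviceaccount "$TOKEN")
#   auth_user "$AUTH"
```

If `auth_user` does not print the username you expect, fix the value before it goes anywhere near openshift-config/pull-secret.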

5) Patch the cluster pull-secret

oc set data secret/pull-secret -n openshift-config \
  --from-file=.dockerconfigjson=$TMP

6) Verify that both the internal image registry and your external container registry are configured

oc get secret/pull-secret -n openshift-config -o json | jq -r '.data[".dockerconfigjson"]' | base64 -d | jq '.auths | keys[]'

5. Set Up Authentication

Integrate with htpasswd, GitHub, GitLab, LDAP, or another IdP:

htpasswd -c -B -b users.htpasswd admin P@ssw0rd

oc create secret generic htpasswd-secret --from-file=htpasswd=users.htpasswd -n openshift-config

oc apply -f oauth.yaml

---
# oauth.yaml
#
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster
spec:
  identityProviders:
  - name: Login Credentials
    mappingMethod: claim
    type: HTPasswd
    htpasswd:
      fileData:
        name: htpasswd-secret

If you want to bind this user as a cluster-admin, use the command below. Replace <username> with your username, e.g., admin.

oc adm policy add-cluster-role-to-user cluster-admin <username> --rolebinding-name=cluster-admin

6. Configure Storage (CSI, ODF, LVM)

If you have ODF, configure it now (block/file/object) and make it the default storage class. On SNO, install LVM Storage from OperatorHub; it can claim the other disks that are not in use (e.g., /dev/sdb).
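Before marking a class as default, it can be worth checking whether one already carries the default annotation, since storage operators may have set it for you and having several defaults is best avoided. A minimal sketch follows; `default_storage_classes` is a made-up name, and the input format is the one produced by the jsonpath expression in the usage comment.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads "name<TAB>is-default-class" lines on stdin and
# prints the StorageClasses currently annotated as default.
default_storage_classes() {
  awk -F '\t' '$2 == "true" { print $1 }'
}

# Usage (needs a logged-in oc session):
#   oc get storageclass \
#     -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.storageclass\.kubernetes\.io/is-default-class}{"\n"}{end}' \
#     | default_storage_classes
```

More than one line of output means you should set the annotation to "false" on the classes you don't want as default.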

Set a default StorageClass for PVCs:

oc patch storageclass <sc-name> -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

7. Monitoring & Logging

Mirror the Monitoring & Logging operator images. These are listed in my other ImageSetConfiguration (isc-additional/operators.yaml). I’d use LokiStack.

Enable the monitoring stack in disconnected mode (mirror the necessary images). If needed, deploy ClusterLogging using the offline catalog.

8. Backup & Disaster Recovery

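Take an initial etcd backup. Check out this post about backup and restore (using OADP).

# Run the backup script on one control plane node; replace <control-plane-node>
oc debug node/<control-plane-node> -- chroot /host /usr/local/bin/cluster-backup.sh /home/core/assets/backup

In my opinion, an etcd backup that is more than about a week old is of little use, so schedule recurring backups (e.g., via cron) and keep copies offline too.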
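To keep an eye on that one-week freshness window, something like the following could run from cron on the host where you collect the backups. It's a sketch under assumptions: snapshots are expected to be named snapshot_*.db (the naming used by cluster-backup.sh), and both the directory in the usage comment and the function name are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if <dir> holds at least one etcd snapshot
# modified less than <days> days ago (default 7).
has_fresh_snapshot() {
  local dir="$1"
  local days="${2:-7}"
  find "$dir" -maxdepth 1 -type f -name 'snapshot_*.db' -mtime -"$days" | grep -q .
}

# Usage:
#   has_fresh_snapshot /srv/etcd-backups 7 || echo "WARNING: no etcd snapshot from the last 7 days"
```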

9. Configure Cluster Updates (Offline)

Skip this step if you’re not planning to upgrade at the moment. Either way, go to the Web UI and set the Channel under Cluster Settings to Not configured.

Mirror the OpenShift release images to your internal registry. Update the ClusterVersion to point to your internal mirror, or use the Web UI to define your channel (see the image above). Don’t forget to apply the signature YAML so the images can be validated during updates. I’ll write about this in another post.

Then run the following to start the OpenShift upgrade. Depending on your oc version, a by-tag pull spec like this one may be rejected in favor of a by-digest reference (or require --force), and --allow-explicit-upgrade is needed when the target is not among the cluster’s recommended updates.

oc adm upgrade --to-image=registry.airgap.local/ocp4/openshift4:4.xx.x-x86_64

Before upgrading, check the Red Hat OpenShift Container Platform Update Graph to see which upgrade paths are possible.

10. Harden & Tune Security

- Install the Compliance Operator (and run the CIS Benchmark).

- Set an audit logging policy.

- Configure NetworkPolicy. You could set a default-deny NetworkPolicy in all namespaces. 🙂

- Review all SCCs (oc get scc).

- Restrict default project permissions.
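For the NetworkPolicy item, here's a rough sketch of a default-deny policy generator. It only denies ingress, which is the more conservative starting point; the policy name and function name are mine, and I would try it on application namespaces first rather than on openshift-* namespaces.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints a default-deny-ingress NetworkPolicy for one
# namespace. An empty podSelector selects every pod in that namespace.
default_deny_manifest() {
  local ns="${1:?namespace required}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: ${ns}
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
}

# Usage (needs a logged-in oc session):
#   default_deny_manifest my-app | oc apply -f -
```

Once this is in place, pods in that namespace only accept traffic that another NetworkPolicy explicitly allows, so add the allow rules your routes and monitoring need.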

11. Verify via WebUI / CLI

Finally, check via the Web UI or the CLI whether any pods are in an error state. You can also choose to run the cluster health check & recovery script.

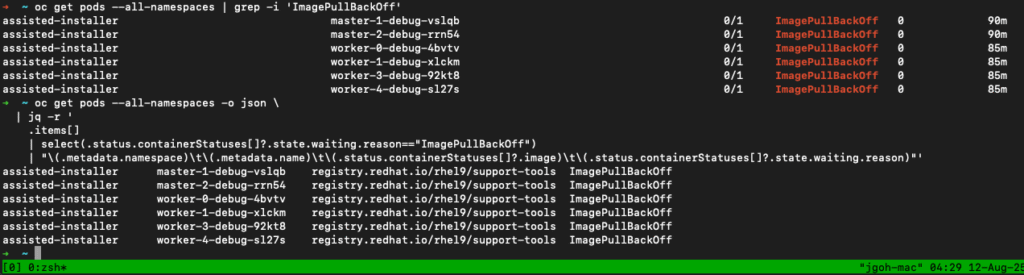

oc get pods -A | grep -i 'ImagePullBackOff'

#OR

watch -n 5 "oc get pods -A | grep -i 'ImagePullBackOff'"

For a more detailed view (namespace, pod name, container, and image):

# Emits one tab-separated row per container stuck in ImagePullBackOff
oc get pods --all-namespaces -o json \
  | jq -r '
    .items[] as $pod
    | $pod.status.containerStatuses[]?
    | select(.state.waiting.reason == "ImagePullBackOff")
    | [$pod.metadata.namespace, $pod.metadata.name, .name, .image, .state.waiting.reason]
    | @tsv'

You might hit an issue similar to mine; here’s how to fix it. Apply this YAML. (DO NOT RUN IN PRODUCTION – read below!)

- These rules work registry‑wide: any pull from registry.redhat.io/* or quay.io/* will be rewritten to your mirror, keeping the path after the registry. With the config below, a pull of registry.redhat.io/rhel9/support-tools:latest becomes registry.kubernetes.day/ocp419/rhel9/support-tools:latest.

- This assumes your mirror actually stores images under that layout (i.e., you’ve pushed them to registry.kubernetes.day/ocp419/). If your mirror uses different repo names, you’ll need repository‑specific entries instead.
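To make the rewrite rule concrete, here's a tiny illustration of the prefix substitution described in the bullets above. It only mimics the mapping in plain bash so you can predict where an image needs to live in your mirror; it is not how the container runtime resolves mirrors internally, and `mirror_ref` is a made-up name.

```bash
#!/usr/bin/env bash
# Illustration only: mirror_ref <image-ref> <source-registry> <mirror-prefix>
# Replaces the source registry with the mirror prefix and keeps the rest of
# the path; references from other registries are printed unchanged.
mirror_ref() {
  local ref="$1" source="$2" mirror="$3"
  case "$ref" in
    "$source"/*) printf '%s/%s\n' "$mirror" "${ref#"$source"/}" ;;
    *)           printf '%s\n' "$ref" ;;
  esac
}

# Usage:
#   mirror_ref registry.redhat.io/rhel9/support-tools:latest \
#     registry.redhat.io registry.kubernetes.day/ocp419
```

Running it against the image references from your failing pods gives you the list of paths to check for in the mirror.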

# idms-support-tools.yaml
# Mirror by DIGEST (authoritative) for entire registries
apiVersion: config.openshift.io/v1
kind: ImageDigestMirrorSet
metadata:
  name: mirror-quay-and-registry-redhat
spec:
  imageDigestMirrors:
  - source: registry.redhat.io
    mirrors:
    - registry.kubernetes.day/ocp419
  - source: quay.io
    mirrors:
    - registry.kubernetes.day/ocp419
---
# itms-support-tools.yaml (optional but handy for tag-based pulls)
# Optional: also mirror TAG lookups (handy while you’re stabilizing)
apiVersion: config.openshift.io/v1
kind: ImageTagMirrorSet
metadata:
  name: mirror-quay-and-registry-redhat
spec:
  imageTagMirrors:
  - source: registry.redhat.io
    mirrors:
    - registry.kubernetes.day/ocp419
  - source: quay.io
    mirrors:
    - registry.kubernetes.day/ocp419

After that, delete the affected pods so they are recreated and retry their pulls.

Also, you’ll notice when you apply ImageDigestMirrorSet / ImageTagMirrorSet, the Machine Config Operator (MCO) updates the nodes’ /etc/containers/registries.conf to honor those mirrors. That change is delivered via a new rendered MachineConfig, which rolls your nodes (masters one‑by‑one, then workers by pool). Reboots/evictions = lots of pods restarting.

I’ll write another blog post on how you can control this blast radius by pausing the MCP, applying your mirror objects, and then unpausing it.

Make sure:

- Trust: the cluster is configured to trust registry.kubernetes.day’s TLS certificate (MachineConfig or additionalTrustBundle baked in at install).

- Auth: the cluster pull‑secret (openshift-config/pull-secret) includes creds for registry.kubernetes.day (you already merged these earlier).

- Images exist: the repos you’ll pull actually exist in the mirror under quayadmin/<same path>.