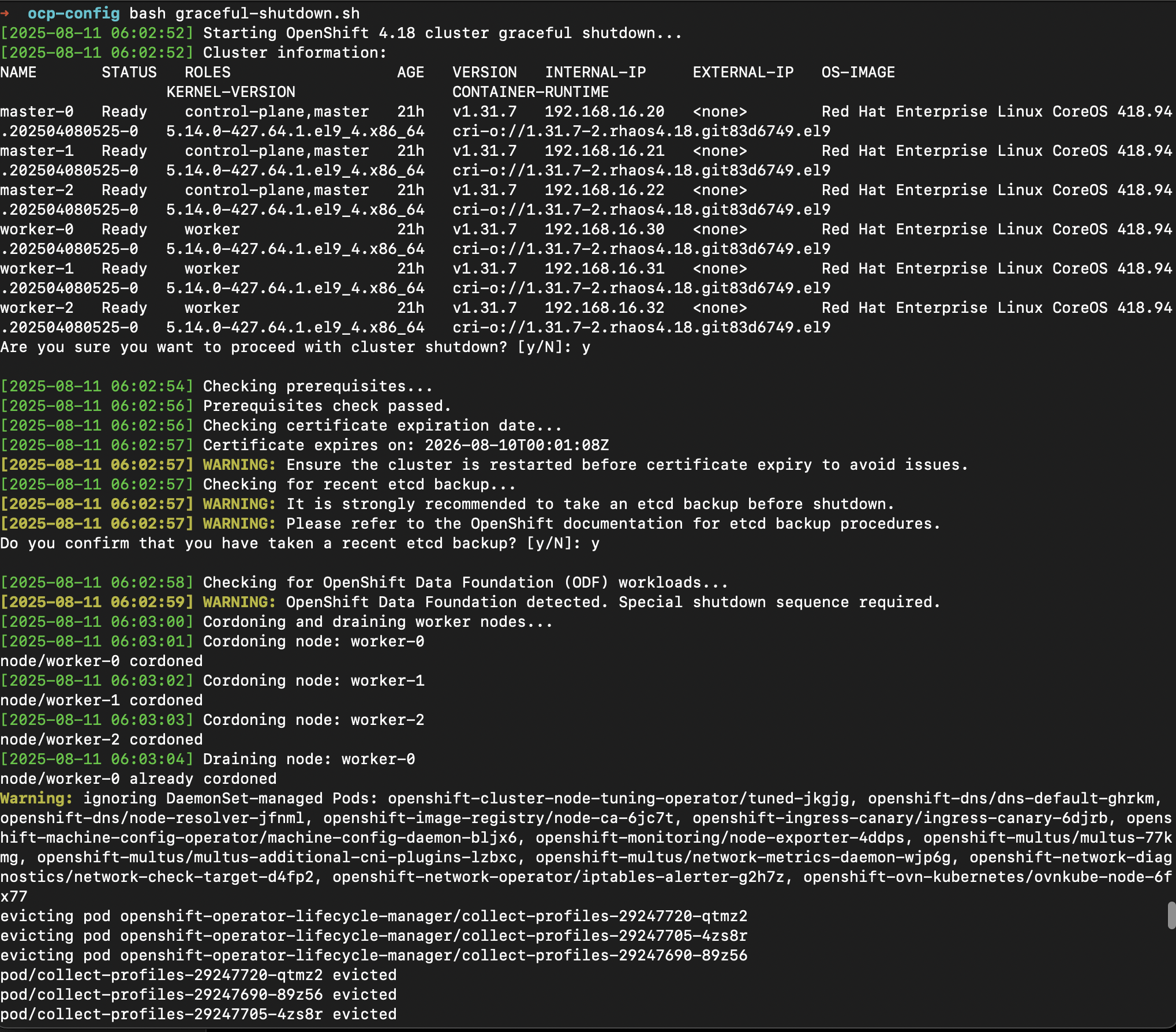

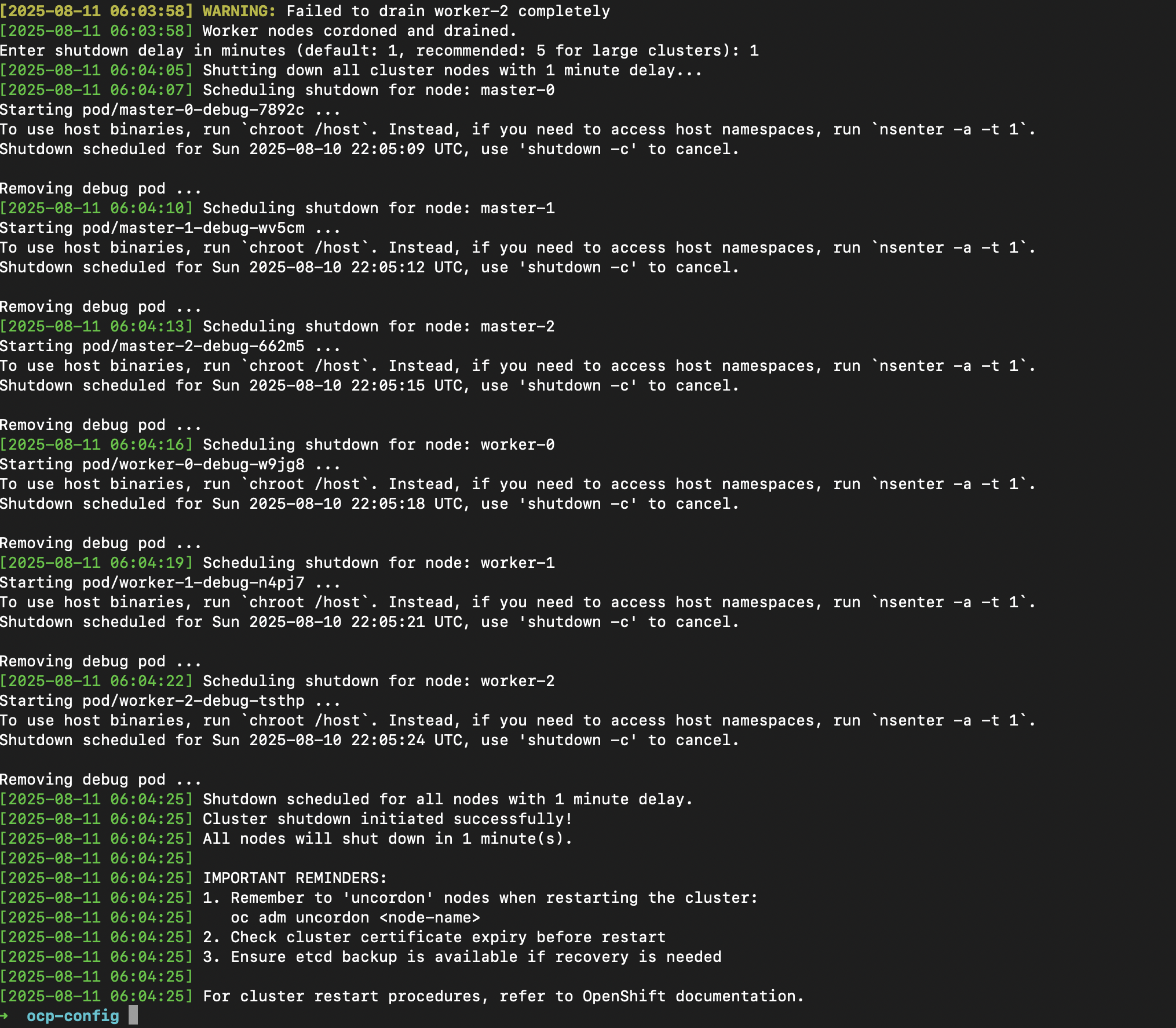

Got a pretty nifty script that automates your OpenShift cluster shutdown in one go.

It runs all the prerequisite checks and warns you about anything you might have missed (including the etcd backup), so you don't have to worry about forgetting a step. It also cordons and drains the worker nodes, so the pods are evicted automatically.
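The script only asks you to confirm that a recent etcd backup exists. It does not take one. If you want to take the backup from the same bastion, here is a minimal sketch. It assumes cluster-admin access and the `cluster-backup.sh` script that the OpenShift etcd backup procedure runs from `/usr/local/bin` on a control plane node. The function name `take_etcd_snapshot` is hypothetical, and the default directory is the one used in the Red Hat documentation.

```bash
#!/bin/bash
# Sketch: take an etcd snapshot from the bastion before running the shutdown script.
# Assumes cluster-admin access and /usr/local/bin/cluster-backup.sh on the
# control plane nodes (the script used by the OpenShift etcd backup procedure).
set -euo pipefail

# take_etcd_snapshot [BACKUP_DIR]  (hypothetical helper name)
take_etcd_snapshot() {
  local backup_dir="${1:-/home/core/assets/backup}"
  local control_plane node
  # Any control plane node works; pick the first one returned.
  control_plane=$(oc get nodes -l node-role.kubernetes.io/master \
    -o jsonpath='{.items[*].metadata.name}')
  node=${control_plane%% *}
  if [[ -z "$node" ]]; then
    echo "No control plane node found" >&2
    return 1
  fi
  echo "Backing up etcd on $node to $backup_dir"
  # Run the backup non-interactively in a debug pod, chrooted into the host filesystem.
  oc debug "node/$node" -- chroot /host /usr/local/bin/cluster-backup.sh "$backup_dir"
}
```

Run `take_etcd_snapshot` before starting the shutdown. Then copy the resulting files off the node, because a backup that lives only on a node you are about to power off is of limited use.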

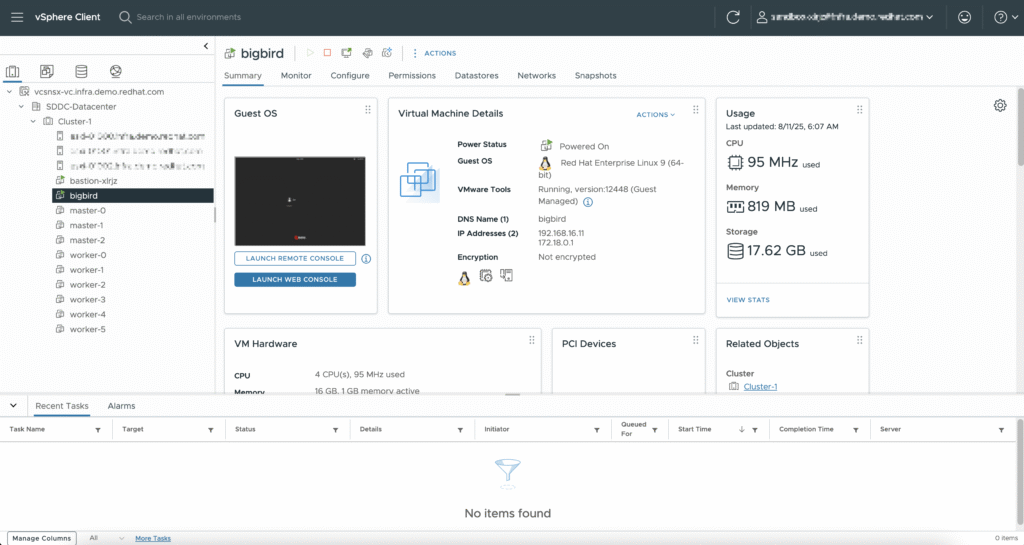

All done, as shown in my vCenter. 🙂

Notes

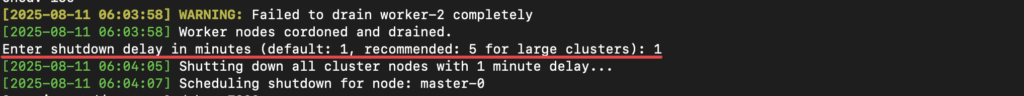

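- There is a bug in the script: the API server can go down before every worker node has been issued its shutdown command. This most likely happens because `shutdown_nodes` schedules the nodes one at a time through `oc debug`, so the control plane nodes' timers can run out before the loop reaches the last workers. If that happens, SSH into the remaining worker nodes (as the `core` user) and shut them down manually with `sudo shutdown -h now`.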
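One possible mitigation, not part of the original script, is to schedule the compute nodes first and the control plane nodes last. Each control plane node's timer only starts when its own `oc debug` call runs. This means the API should stay reachable for at least the chosen delay after the last worker has been scheduled. The helper names below are hypothetical. The role labels are assumed to be the standard `node-role.kubernetes.io/worker` and `node-role.kubernetes.io/master`.

```bash
#!/bin/bash
# Possible mitigation (not in the original script): schedule compute nodes first
# and control plane nodes last. Helper names are hypothetical.
set -euo pipefail

# shutdown_order WORKERS CONTROL_PLANE
# Prints one node per line: workers that are not also control plane nodes
# (compact clusters label masters as workers too), then control plane nodes.
shutdown_order() {
  local workers="$1" control_plane="$2" node
  for node in $workers; do
    if [[ " $control_plane " != *" $node "* ]]; then
      echo "$node"
    fi
  done
  for node in $control_plane; do
    echo "$node"
  done
}

# schedule_ordered_shutdown [DELAY_MINUTES]
schedule_ordered_shutdown() {
  local delay="${1:-1}" workers control_plane node
  workers=$(oc get nodes -l node-role.kubernetes.io/worker -o jsonpath='{.items[*].metadata.name}')
  control_plane=$(oc get nodes -l node-role.kubernetes.io/master -o jsonpath='{.items[*].metadata.name}')
  for node in $(shutdown_order "$workers" "$control_plane"); do
    oc debug "node/$node" -- chroot /host shutdown -h "$delay" \
      || echo "Failed to schedule shutdown for $node" >&2
  done
}
```

`schedule_ordered_shutdown "$delay"` could stand in for the loop inside `shutdown_nodes`. A longer delay still helps, because it gives the slower `oc debug` calls more headroom.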

```bash
#!/bin/bash
# OpenShift 4.18 graceful shutdown script for air-gapped environments
# Compatible with RHEL 9.6
# Script to gracefully shut down an OpenShift cluster

set -euo pipefail

# Color codes for output
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

# Logging functions (warnings and errors go to stderr)
log() {
  echo -e "${GREEN}[$(date '+%Y-%m-%d %H:%M:%S')]${NC} $1"
}

warn() {
  echo -e "${YELLOW}[$(date '+%Y-%m-%d %H:%M:%S')] WARNING:${NC} $1" >&2
}

error() {
  echo -e "${RED}[$(date '+%Y-%m-%d %H:%M:%S')] ERROR:${NC} $1" >&2
  exit 1
}

# Confirmation prompt: succeeds only if the user answers y or Y
confirm() {
  read -p "$1 [y/N]: " -r || true
  echo
  [[ ${REPLY:-} =~ ^[Yy]$ ]]
}

# Check prerequisites
check_prerequisites() {
  log "Checking prerequisites..."

  # Check that the oc command is available
  if ! command -v oc &> /dev/null; then
    error "OpenShift CLI (oc) not found. Please install and configure the oc command."
  fi

  # Check that the cluster is reachable and we are logged in
  if ! oc get nodes &> /dev/null; then
    error "Cannot access the cluster. Please check your connection and authentication."
  fi

  # Check that we have cluster-admin access
  if ! oc auth can-i '*' '*' --all-namespaces &> /dev/null; then
    error "You need cluster-admin privileges to run this script."
  fi

  log "Prerequisites check passed."
}

# Check certificate expiration
check_certificate_expiry() {
  log "Checking certificate expiration date..."
  local cert_expiry
  cert_expiry=$(oc -n openshift-kube-apiserver-operator get secret kube-apiserver-to-kubelet-signer \
    -o jsonpath='{.metadata.annotations.auth\.openshift\.io/certificate-not-after}' 2>/dev/null || echo "")

  if [[ -n "$cert_expiry" ]]; then
    log "Certificate expires on: $cert_expiry"
    warn "Ensure the cluster is restarted before the certificate expires to avoid issues."
  else
    warn "Could not retrieve certificate expiry information."
  fi
}

# Confirm that an etcd backup has been taken (the script does not take one itself)
take_etcd_backup() {
  log "Checking for recent etcd backup..."
  warn "It is strongly recommended to take an etcd backup before shutdown."
  warn "Please refer to the OpenShift documentation for etcd backup procedures."

  if ! confirm "Do you confirm that you have taken a recent etcd backup?"; then
    error "Please take an etcd backup before proceeding with cluster shutdown."
  fi
}

# Cordon and drain worker nodes
drain_worker_nodes() {
  log "Cordoning and draining worker nodes..."
  local worker_nodes node

  # Dedicated workers only: skip nodes that are also control plane (compact clusters)
  worker_nodes=$(oc get nodes -l 'node-role.kubernetes.io/worker,!node-role.kubernetes.io/master' \
    -o jsonpath='{.items[*].metadata.name}' 2>/dev/null || echo "")

  if [[ -z "$worker_nodes" ]]; then
    warn "No dedicated worker nodes found. This might be a compact cluster."
    return 0
  fi

  # Cordon all worker nodes first so evicted pods cannot land on another worker
  for node in $worker_nodes; do
    log "Cordoning node: $node"
    oc adm cordon "$node" || warn "Failed to cordon $node"
  done

  # Drain worker nodes (DaemonSet pods are left in place)
  for node in $worker_nodes; do
    log "Draining node: $node"
    oc adm drain "$node" --delete-emptydir-data --ignore-daemonsets=true --timeout=15s --force \
      || warn "Failed to drain $node completely"
  done

  log "Worker nodes cordoned and drained."
}

# Handle special workloads (if ODF/Ceph is present)
handle_special_workloads() {
  log "Checking for OpenShift Data Foundation (ODF) workloads..."

  # Nothing to do unless ODF is installed
  if ! oc get namespace openshift-storage &> /dev/null; then
    return 0
  fi

  warn "OpenShift Data Foundation detected. Special shutdown sequence required."

  if ! command -v jq &> /dev/null; then
    error "jq not found. Please install jq to detect workloads using ODF storage."
  fi

  # PVCs whose storage class starts with ocs-storagecluster-ceph
  # (e.g. ocs-storagecluster-ceph-rbd, ocs-storagecluster-cephfs)
  local odf_pvcs pvc namespace claimname pods pod
  odf_pvcs=$(oc get pvc -A -o json \
    | jq -r '.items[] | select((.spec.storageClassName // "") | startswith("ocs-storagecluster-ceph")) | .metadata.namespace + "/" + .metadata.name' 2>/dev/null \
    || echo "")

  if [[ -z "$odf_pvcs" ]]; then
    return 0
  fi

  log "Found workloads using ODF storage. Shutting down pods using ODF storage..."

  # Delete every pod that mounts an ODF-backed PVC.
  # Pods owned by a Deployment or StatefulSet will be recreated by their controller.
  while IFS= read -r pvc; do
    namespace=${pvc%%/*}
    claimname=${pvc#*/}
    pods=$(oc get pods -n "$namespace" -o json \
      | jq -r --arg claimname "$claimname" '.items[] | select(any(.spec.volumes[]?; .persistentVolumeClaim.claimName == $claimname)) | .metadata.name' 2>/dev/null \
      || echo "")

    for pod in $pods; do
      log "Deleting pod $pod in namespace $namespace (using ODF storage)"
      oc delete pod "$pod" -n "$namespace" --grace-period=30 || warn "Failed to delete pod $pod"
    done
  done <<< "$odf_pvcs"
}

# Schedule a delayed shutdown on every cluster node
shutdown_nodes() {
  local shutdown_delay=${1:-1}
  local all_nodes node

  all_nodes=$(oc get nodes -o jsonpath='{.items[*].metadata.name}')
  if [[ -z "$all_nodes" ]]; then
    error "No nodes found in the cluster."
  fi

  log "Shutting down all cluster nodes with ${shutdown_delay} minute delay..."
  for node in $all_nodes; do
    log "Scheduling shutdown for node: $node"
    oc debug node/"$node" -- chroot /host shutdown -h "$shutdown_delay" \
      || warn "Failed to schedule shutdown for $node"
  done

  log "Shutdown scheduled for all nodes with ${shutdown_delay} minute delay."
}

# Main shutdown function
main() {
  log "Starting OpenShift 4.18 cluster graceful shutdown..."

  # Verify oc, cluster access and cluster-admin rights before touching anything
  check_prerequisites

  # Show cluster info (all nodes, so you can review what will be shut down)
  log "Cluster information:"
  oc get nodes -o wide

  # Final confirmation
  if ! confirm "Are you sure you want to proceed with cluster shutdown?"; then
    log "Shutdown cancelled by user."
    exit 0
  fi

  # Execute shutdown steps
  check_certificate_expiry
  take_etcd_backup
  handle_special_workloads
  drain_worker_nodes

  # Ask for a custom shutdown delay
  local custom_delay="" delay node_count
  read -p "Enter shutdown delay in minutes (default: 1, recommended: 10 for clusters with 10+ nodes): " -r custom_delay || true
  delay=${custom_delay:-1}

  # Validate that the delay is a positive integer
  if ! [[ "$delay" =~ ^[1-9][0-9]*$ ]]; then
    warn "Invalid delay specified. Using default delay of 1 minute."
    delay=1
  fi

  # For larger clusters (10+ nodes), raise the default 1-minute delay to 10 minutes
  node_count=$(oc get nodes -o name | wc -l)
  if [[ $node_count -ge 10 && $delay -eq 1 ]]; then
    delay=10
    warn "Large cluster detected ($node_count nodes). Using ${delay} minute shutdown delay."
  fi

  shutdown_nodes "$delay"

  log "Cluster shutdown initiated successfully!"
  log "All nodes will shut down in $delay minute(s)."
  log ""
  log "IMPORTANT REMINDERS:"
  log "1. Remember to 'uncordon' nodes when restarting the cluster:"
  log "   oc adm uncordon <node-name>"
  log "2. Check cluster certificate expiry before restart"
  log "3. Ensure etcd backup is available if recovery is needed"
  log ""
  log "For cluster restart procedures, refer to OpenShift documentation."
}

# Execute main function
main "$@"
```
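`check_certificate_expiry` prints only the raw timestamp and leaves the arithmetic to you. Here is a small sketch that turns it into days remaining. It assumes GNU `date`, which is the default on RHEL 9. `days_until` and `cert_days_left` are hypothetical helper names.

```bash
#!/bin/bash
# Sketch: days left on the kube-apiserver-to-kubelet-signer certificate.
# Assumes GNU date (default on RHEL 9); helper names are hypothetical.
set -euo pipefail

# days_until TIMESTAMP [NOW_EPOCH]
# Whole days (bash integer division, truncated toward zero) from NOW_EPOCH
# (default: current time) until TIMESTAMP, e.g. 2025-01-31T00:00:00Z.
days_until() {
  local expiry_epoch now_epoch
  expiry_epoch=$(date -u -d "$1" +%s)
  now_epoch="${2:-$(date -u +%s)}"
  echo $(( (expiry_epoch - now_epoch) / 86400 ))
}

# Read the same annotation the shutdown script checks and convert it.
cert_days_left() {
  local not_after
  not_after=$(oc -n openshift-kube-apiserver-operator get secret kube-apiserver-to-kubelet-signer \
    -o jsonpath='{.metadata.annotations.auth\.openshift\.io/certificate-not-after}')
  days_until "$not_after"
}
```

Compare `cert_days_left` with how long you expect the cluster to stay down. If the outage would outlast the certificate, plan the restart earlier.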

Head over to the other blog post to check cluster health upon bootup!
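On the way back up, the script's first reminder applies: the drained workers stay cordoned until you uncordon them. Here is a minimal sketch that assumes the standard worker role label. `not_ready_nodes`, `wait_until_ready`, and `uncordon_workers` are hypothetical helper names. Treating an unreachable API as "not ready yet" is a design choice of this sketch, not something the original script does.

```bash
#!/bin/bash
# Sketch: after power-on, wait until every node is Ready, then uncordon the workers.
# Helper names are hypothetical; assumes the standard worker role label.
set -euo pipefail

# Print the names of nodes whose Ready condition is not "True".
not_ready_nodes() {
  oc get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.conditions[?(@.type=="Ready")].status}{"\n"}{end}' \
    | awk '$2 != "True" { print $1 }'
}

# Poll until every node is Ready; a failing oc call counts as "not ready yet".
wait_until_ready() {
  local interval="${1:-30}" pending
  while true; do
    if pending=$(not_ready_nodes) && [[ -z "$pending" ]]; then
      return 0
    fi
    echo "Waiting for nodes: ${pending:-API not reachable yet}" >&2
    sleep "$interval"
  done
}

# Mark every worker schedulable again (uncordoning an already schedulable node is harmless).
uncordon_workers() {
  local node
  for node in $(oc get nodes -l node-role.kubernetes.io/worker -o jsonpath='{.items[*].metadata.name}'); do
    oc adm uncordon "$node"
  done
}
```

A typical call is `wait_until_ready 30 && uncordon_workers`, run from the bastion once the VMs are powered back on.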